Most VR isn’t even VR at all.

So how do you make great VR?

The basic principle of a virtual reality headset is that each eye sees a slightly different image of the scene in every frame, which provides correct parallax and depth cues. This is known as “stereo rendering”. Doing this in a 3D behavioral task is considerably harder than in a 3D movie. In a digital movie the scenes are pre-rendered, a process that can take hours per frame (e.g. 29 hours to render a single frame of Monsters University in 2D), typically on a render farm, which is essentially a warehouse full of high-end computers. In a behavioral task we cannot know what the user is going to do next: the camera angle shifts unpredictably, and the position and path change. Because all of this happens in real time, everything has to be recalculated roughly every 11 milliseconds. Any slower and the lag between optical input and vestibular input causes nausea and disorientation. In effect, the refresh rate of the images must not fall below 90Hz.
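The link between refresh rate and the time available per frame is simple arithmetic, and a minimal Python sketch makes it concrete. The function name `frame_budget_ms` is purely illustrative and is not part of any product code.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Return the time available to produce one frame, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    # Compare typical on-screen rates with the 90Hz floor for VR.
    for hz in (30, 60, 90):
        print(f"{hz} Hz -> {frame_budget_ms(hz):.1f} ms per frame")
```

At 90Hz the budget is about 11.1 ms, roughly a third of what a 30 FPS on-screen game has to work with.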

Conventional PC 3D graphics, such as on-screen games or so-called on-screen VR (a misnomer), have soft real-time requirements: maintaining 30-60 FPS has been adequate. True VR turns graphics into more of a hard real-time problem, because each missed frame is visible. Continuously missing the target frame rate is a jarring, uncomfortable experience. As a result, processing headroom becomes critical for absorbing unexpected dips in system or content performance.

To avoid this, it is essential to reliably sustain frame rates of at least 90 FPS, v-synced and unbuffered. Lag and dropped frames produce judder, which is uncomfortable and quickly becomes nauseating in VR.
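To illustrate why headroom matters, here is a rough Python sketch that counts how many display refreshes have to repeat an old image for a given series of render times. It assumes a deliberately simplified model: every frame starts rendering on a refresh boundary, and a finished frame waits for the next refresh before it is shown (v-sync, no pipelining). The function name and the sample timings are hypothetical.

```python
import math

REFRESH_HZ = 90
BUDGET_MS = 1000.0 / REFRESH_HZ  # about 11.1 ms per refresh


def missed_refreshes(render_times_ms, budget_ms=BUDGET_MS):
    """Count refreshes that show a stale image because a frame ran late.

    Simplified model (an assumption, not a real compositor): a frame that
    takes t ms occupies ceil(t / budget) refresh intervals, so every
    interval beyond the first is a missed refresh.
    """
    missed = 0
    for t in render_times_ms:
        intervals = max(1, math.ceil(t / budget_ms))
        missed += intervals - 1
    return missed


if __name__ == "__main__":
    # Hypothetical render times in milliseconds for five consecutive frames.
    sample = [8.0, 10.5, 12.0, 25.0, 9.0]
    print(missed_refreshes(sample))
```

In this model a frame that overruns the budget by even a fraction of a millisecond still costs a whole refresh, which is why average frame time alone is a poor measure of VR performance.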

What is more, an immersive experience needs a relatively wide angle of view, while maintaining stereopsis without excessive aliasing requires high resolution in the foveal region. That means that although the displayed resolution is 2160 x 1200 (roughly two to two and a half times the pixels of an iPhone 6S or a MacBook Air), the system has to render a “viewing buffer” 1.4x the size of the display in each dimension. This results in a true render resolution of 3024×1680, or 1512×1680 for each eye. The purpose of the viewing buffer is to compensate for the distortion of the headset’s lenses. With a rendering resolution of 3024×1680 at a 90Hz refresh rate, this creates a graphical demand of up to 457 million pixels per second.
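The figures above can be reproduced with a few lines of Python. This is only a worked calculation using the numbers quoted in the text (2160 x 1200 display, 1.4x scale per dimension, 90Hz); the function names are illustrative.

```python
def render_resolution(display_w, display_h, scale=1.4):
    """Scale each display dimension by the viewing-buffer factor."""
    # round() guards against floating-point error in the multiplication.
    return round(display_w * scale), round(display_h * scale)


def pixels_per_second(width, height, refresh_hz):
    """Pixels that must be shaded each second at the given refresh rate."""
    return width * height * refresh_hz


if __name__ == "__main__":
    w, h = render_resolution(2160, 1200)
    print(f"render target: {w}x{h}, per eye: {w // 2}x{h}")
    print(f"throughput: {pixels_per_second(w, h, 90):,} pixels/second")
```

The result is 457,228,800 pixels per second, which matches the "up to 457 million" figure.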

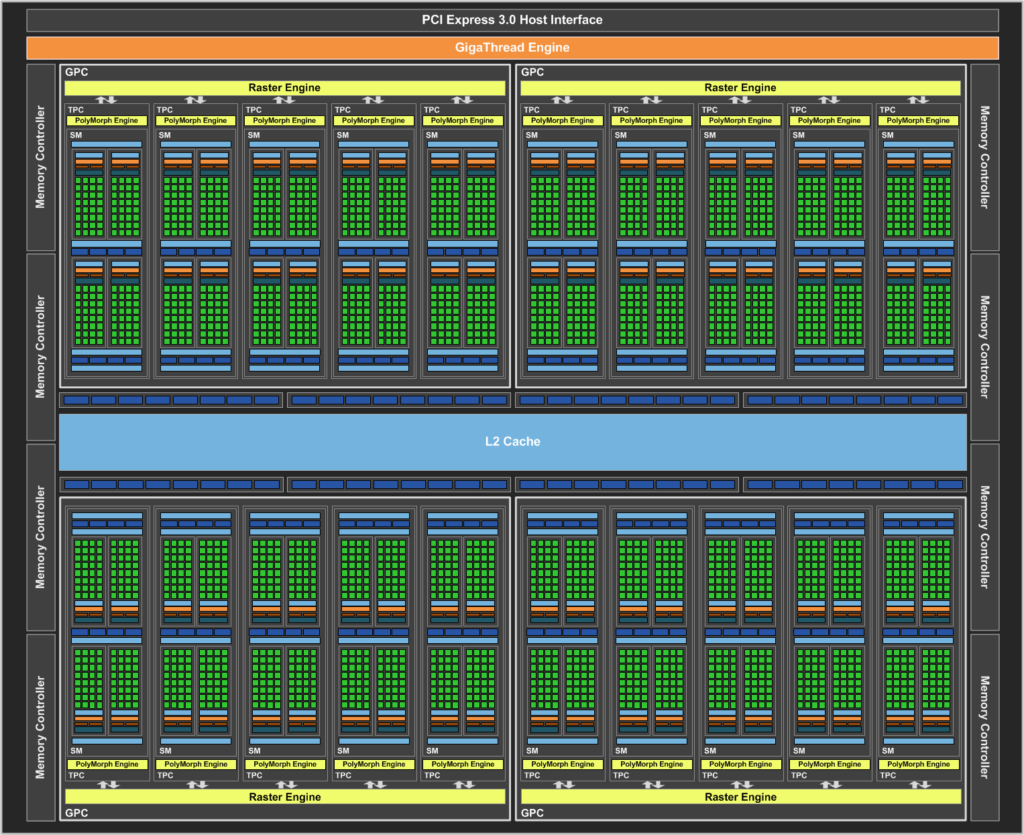

We create highly optimized, cutting-edge code. Perhaps the top 5% of C# programmers make the grade, and no single individual has all the skills used in the HVS 4D VR. For our early version, released and displayed at Society for Neuroscience 2016, we used a computational engine with 2560 cores that provided 9 TFLOPS of performance, more than 80 times the speed of a high-end Intel Core i7 980 XE, which achieves 109 gigaFLOPS. With each subsequent release the technology leads the market in the same way.